Stories act as a ‘pidgin language,’ where both sides (users and developers) can agree enough to work together effectively.

—Bill Wake, co-inventor of Extreme Programming

Story

Stories are the primary artifact used to define system behavior in Agile. They are short, simple descriptions of a small piece of desired functionality, usually told from the user’s perspective and written in the user’s language. Each one is intended to enable the implementation of a small, vertical slice of system behavior that supports incremental development, and each is sized so that an Agile Team can complete it in a single Iteration.

Stories provide just enough information for both business and technical people to understand the intent. Details are deferred until the story is ready to be implemented. Through acceptance criteria and acceptance tests, stories get more specific, helping to ensure system quality.

User stories deliver functionality directly to the end user. Enabler stories bring visibility to the work items needed to support exploration, architecture, infrastructure, and compliance.

Details

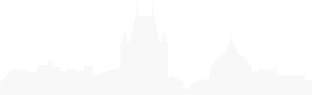

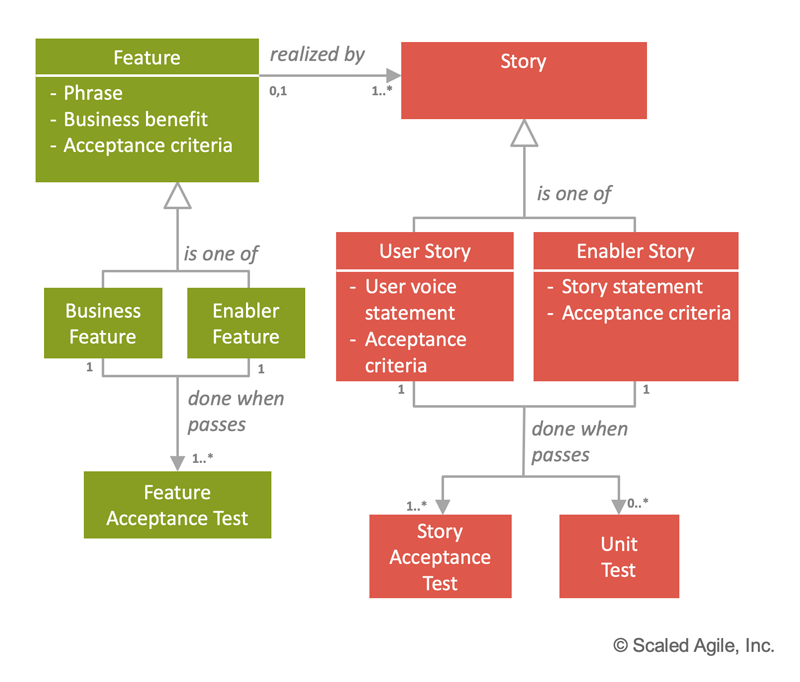

SAFe’s Requirements Model describes a four-tier hierarchy of artifacts that outline functional system behavior: Epic, Capability, Feature, and Story. Collectively, they describe all the work to create the solution’s intended behavior. But the detailed implementation work is described through stories, which make up the Team Backlog. Most stories emerge from business and enabler features in the Program Backlog, but others come from the team’s local context.

Each story is a small, independent behavior that can be implemented incrementally and provides some value to the user or the Solution. It’s a vertical slice of functionality to ensure that every Iteration delivers new value. Stories are small and must be completed in a single iteration (see the Splitting Good Stories section).

Often, stories are first written on an index card or sticky note. The physical nature of the card creates a tangible relationship between the team, the story, and the user: it helps engage the entire team in story writing. Sticky notes offer other benefits as well: they help visualize work and can be readily placed on a wall or table, rearranged in sequence, and even handed off when necessary. Stories also improve understanding of scope and progress:

- “Wow, look at all these stories we are about to sign up for” (scope)

- “Look at all the stories we accomplished in this iteration” (progress)

While anyone can write stories, approving them into the team backlog and accepting them into the system baseline are the responsibility of the Product Owner. Of course, stickies don’t scale well across the Enterprise, so stories often move quickly into Agile Lifecycle Management (ALM) tooling.

There are two types of stories in SAFe, user stories and enabler stories, as described below.

Sources of Stories

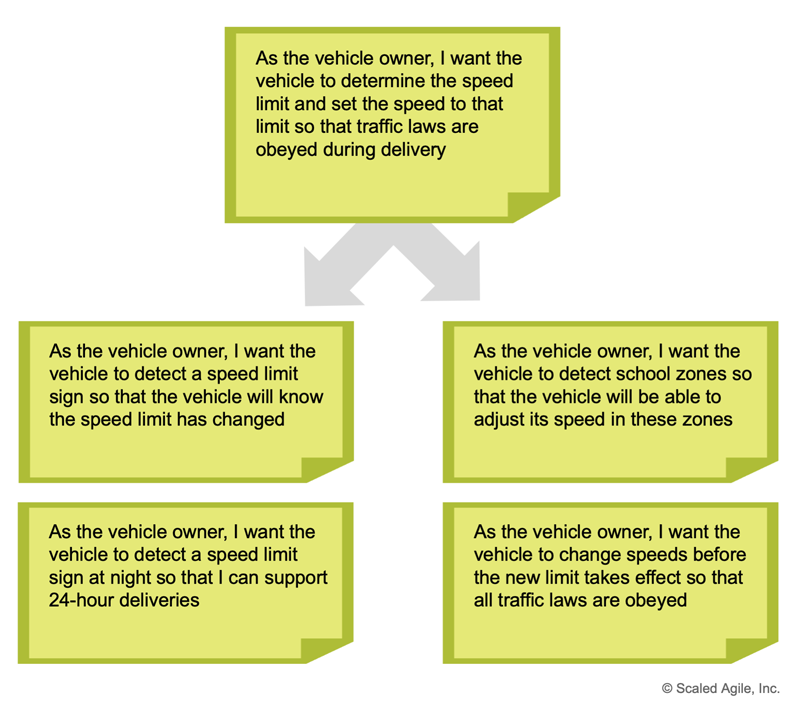

Stories are typically driven by splitting business and enabler features, as Figure 1 illustrates.

User Stories

User stories are the primary means of expressing needed functionality. They largely replace the traditional requirements specification. In some cases, however, they serve as a means to explain and develop system behavior that’s later recorded in specifications that support compliance, suppliers, traceability, or other needs.

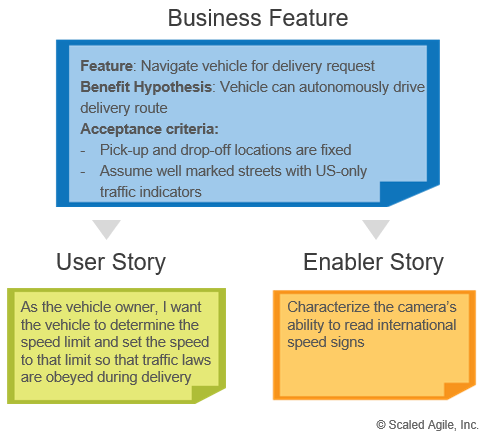

Because they focus on the user as the subject of interest, and not the system, user stories are value- and customer-centric. To support this, the recommended form of expression is the ‘user-voice form,’ as follows:

As a (user role), I want to (activity), so that (business value)

By using this format, the teams are guided to understand who is using the system, what they are doing with it, and why they are doing it. Applying the ‘user voice’ format routinely tends to increase the team’s domain competence; they come to better understand the real business needs of their user. Figure 2 provides an example.
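The user-voice form is essentially a fill-in-the-blanks template with three slots. As a minimal sketch, the hypothetical `UserStory` record below (its name and fields are illustrative, not part of SAFe or any ALM tool) holds one field per slot and renders the sentence; the example story content is also hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """Hypothetical record holding the three parts of the user-voice form."""

    role: str      # who is using the system
    activity: str  # what they are doing with it
    value: str     # why they are doing it (the business value)

    def user_voice(self) -> str:
        # Render the story in the recommended user-voice form.
        return f"As a {self.role}, I want to {self.activity}, so that {self.value}"


story = UserStory(
    role="rider",
    activity="see my ride history",
    value="I can track how often I ride",
)
print(story.user_voice())
# As a rider, I want to see my ride history, so that I can track how often I ride
```

Keeping the three parts as separate fields, rather than one free-text string, makes it easy to notice when a story is missing its "so that" clause.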

As described in Design Thinking, personas describe specific characteristics of representative users that help teams better understand their end user. Example personas for the rider in Figure 2 could be a thrill-seeker ‘Jane’ and a timid rider ‘Bob’. Story descriptions can then reference these personas (As Jane I want…).

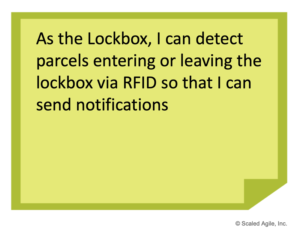

While the user story voice is the common case, not every system interacts with an end user. Sometimes the ‘user’ is a device (e.g., printer) or a system (e.g., transaction server). In these cases, the story can take on the form illustrated in Figure 3.

Enabler Stories

Teams also develop the new architecture and infrastructure needed to implement new user stories. In this case, the story may not directly touch any end user. Teams use ‘enabler stories’ to support exploration, architecture, or infrastructure. Enabler stories can be expressed in technical rather than user-centric language, as Figure 4 illustrates.

There are many other types of enabler stories, including:

- Refactoring and Spikes (as traditionally defined in XP)

- Building or improving development/deployment infrastructure

- Running jobs that require human interaction (e.g., indexing 1 million web pages)

- Creating the required product or component configurations for different purposes

- Verification of system qualities (e.g., performance and vulnerability testing)

Enabler stories are demonstrated just like user stories, typically by showing the knowledge gained, artifacts produced, or the user interface, stub, or mock-up.

Writing Good Stories

Good stories require multiple perspectives. In agile, the entire team (Product Owner, developers, and testers) creates a shared understanding of what to build, which reduces rework and increases throughput. Teams collaborate using Behavior-Driven Development (BDD) to define detailed acceptance tests that definitively describe each story. Each role contributes its own perspective:

- Product Owners provide customer thinking for viability and desirability

- Developers provide technical feasibility

- Testers provide broad thinking for exceptions, edge cases, and other unexpected ways users may interact with the system

Collaborative story writing ensures that all perspectives are addressed and that everyone agrees on the story’s behavior; the results are captured in the story’s description, acceptance criteria, and acceptance tests. The acceptance tests are written in the system’s domain language using BDD. BDD tests are then automated and run continuously to maintain Built-In Quality. Because the BDD tests are written against system requirements (stories), they can serve as the definitive statement of the system’s behavior, replacing document-based specifications.

The 3Cs: Card, Conversation, Confirmation

Ron Jeffries, one of the inventors of XP, is credited with describing the 3Cs of a story:

- Card – Captures the user story’s statement of intent using an index card, sticky note, or tool. Index cards provide a physical relationship between the team and the story. The card’s size physically limits the story’s length and discourages premature specification of system behavior. Cards also help the team ‘feel’ upcoming scope, as there is something materially different about holding ten cards in one’s hand versus looking at ten lines on a spreadsheet.

- Conversation – Represents a “promise for a conversation” about the story between the team, customer (or the customer’s proxy), the PO (who may be representing the customer), and other stakeholders. The discussion is necessary to determine more detailed behavior required to implement the intent. The conversation may spawn additional specificity in the form of acceptance criteria (the confirmation below) or attachments to the user story. The conversation spans all steps in the story life cycle:

  - Backlog refinement

  - Planning

  - Implementation

  - Demo

  These just-in-time discussions create a shared understanding of the scope that formal documentation cannot provide. Specification by example replaces detailed documentation. Conversations also help uncover gaps in user scenarios and nonfunctional requirements (NFRs).

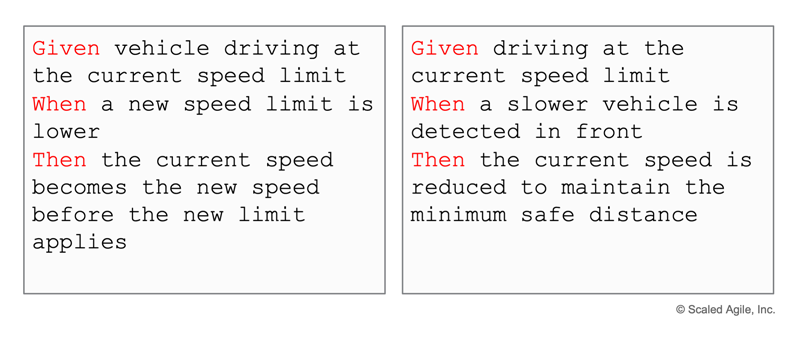

- Confirmation – The acceptance criteria provide the information needed to ensure that the story is implemented correctly and covers the relevant functional requirements and NFRs. Figure 5 provides an example. Some teams also use the confirmation section of the story card to write down what they will demo.

Agile teams automate acceptance tests wherever possible, often in business-readable, domain-specific language. Automation creates an executable specification to validate and verify the solution. Automation also provides the ability to quickly regression-test the system, enhancing Continuous Integration, refactoring, and maintenance.
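Automated acceptance tests are often written in Given/When/Then form, either with a BDD tool or directly in a unit-test framework. The sketch below uses plain Python and assumes a hypothetical `RideHistory` component and a hypothetical acceptance criterion ("rides are listed most recent first"); neither comes from the article. A team would normally run such a test under a runner such as pytest rather than calling it directly.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RideHistory:
    """Hypothetical system under test; stands in for the real solution."""

    rides: list[tuple[date, str]] = field(default_factory=list)

    def record(self, day: date, route: str) -> None:
        self.rides.append((day, route))

    def listing(self) -> list[str]:
        # Most recent ride first, as the acceptance criterion requires.
        return [route for _, route in sorted(self.rides, reverse=True)]


def test_rider_sees_rides_most_recent_first() -> None:
    # Given a rider with two completed rides
    history = RideHistory()
    history.record(date(2021, 6, 1), "Downtown loop")
    history.record(date(2021, 6, 3), "River trail")
    # When the rider opens their ride history
    shown = history.listing()
    # Then both rides are listed, most recent first
    assert shown == ["River trail", "Downtown loop"]


test_rider_sees_rides_most_recent_first()
```

Because the comments mirror the business-readable criterion line for line, the test doubles as an executable specification that can be rerun on every change.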

Investing in Good Stories

Agile teams spend a significant amount of time discovering, elaborating, and understanding user stories and writing acceptance tests. This is as it should be, because it reflects the fact that:

Writing the code for an understood objective is not necessarily the hardest part of software development; rather, it is understanding what the real objective for the code is.

Therefore, investing in good user stories, albeit at the last responsible moment, is a worthy effort for the team. Bill Wake coined the acronym INVEST [1] to describe the attributes of a good user story.

- I – Independent (among other stories)

- N – Negotiable (a flexible statement of intent, not a contract)

- V – Valuable (providing a valuable vertical slice to the customer)

- E – Estimable (small and negotiable)

- S – Small (fits within an iteration)

- T – Testable (understood enough to know how to test it)

Splitting Good Stories

Smaller stories allow faster, more reliable implementation, since small items flow through any system faster, with less variability and reduced risk. Therefore, splitting bigger stories into smaller ones is a mandatory skill for every Agile team. It’s both the art and the science of incremental development. Ten ways to split stories are described in Agile Software Requirements [1]. A summary of these techniques follows:

- Workflow steps

- Business rule variations

- Major effort

- Simple/complex

- Variations in data

- Data entry methods

- Deferred system qualities

- Operations (e.g., Create, Read, Update, Delete [CRUD])

- Use-case scenarios

- Break-out spike

Figure 6 illustrates an example of splitting by use-case scenarios.
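Of the techniques above, splitting by operations is the most mechanical, so it lends itself to a small illustration. The hypothetical helper below turns one broad "manage a thing" story into one user-voice story per CRUD operation; the function name, verbs, and example story are assumptions for illustration, and a team would still check that each resulting slice delivers value on its own.

```python
# Verbs standing in for Create, Read, Update, Delete; "view" plays the role of Read.
CRUD_VERBS = ["create", "view", "update", "delete"]


def split_by_operations(role: str, entity: str, value: str) -> list[str]:
    """Split one 'manage <entity>' story into one user-voice story per CRUD operation."""
    return [
        f"As a {role}, I want to {verb} a {entity}, so that {value}"
        for verb in CRUD_VERBS
    ]


for s in split_by_operations("rider", "saved route", "I can reuse routes I ride often"):
    print(s)
```

Each resulting story can then be estimated and prioritized separately; for example, the delete story might reasonably be deferred to a later iteration.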

Note: A more detailed description of user stories can be found in the whitepaper A User Story Primer.

Estimating Stories

Agile teams use story points and ‘estimating poker’ to estimate their work [1, 2]. A story point is a single number that represents a combination of qualities:

- Volume – How much is there?

- Complexity – How hard is it?

- Knowledge – What’s known?

- Uncertainty – What’s unknown?

Story points are relative, without a connection to any specific unit of measure. The size (effort) of each story is estimated relative to the smallest story, which is assigned a size of ‘one.’ Teams apply a modified Fibonacci sequence (1, 2, 3, 5, 8, 13, 20, 40, 100) whose widening gaps reflect the inherent uncertainty in estimating, especially for large numbers (e.g., 20, 40, 100) [2].
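To see how the scale absorbs uncertainty, the sketch below snaps a raw relative size (where 1.0 is the reference ‘one’ story) onto the modified Fibonacci scale. It assumes the team rounds up to the next card and treats anything beyond 100 as a signal to split; both conventions are illustrative assumptions, not rules stated in the article.

```python
import bisect

# Modified Fibonacci scale used for story points.
SCALE = [1, 2, 3, 5, 8, 13, 20, 40, 100]


def to_scale(relative_size: float) -> int:
    """Snap a raw relative size (1.0 = the reference story) up to the next scale value."""
    if relative_size <= 0:
        raise ValueError("relative size must be positive")
    # bisect_left finds the first scale value >= relative_size.
    i = bisect.bisect_left(SCALE, relative_size)
    if i == len(SCALE):
        raise ValueError("larger than 100: split the story before estimating")
    return SCALE[i]


print(to_scale(4))   # 5
print(to_scale(25))  # 40
```

A story judged to be "about four times" the reference lands on 5, not 4: the scale deliberately refuses precision it cannot support.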

Estimating Poker

Agile teams often use ‘estimating poker,’ which combines expert opinion, analogy, and disaggregation to create quick but reliable estimates. Disaggregation refers to splitting a story or feature into smaller, easier-to-estimate pieces. (A number of other estimating methods are used as well.)

The rules of estimating poker are:

- Participants include all team members.

- Each estimator is given a deck of cards with 1, 2, 3, 5, 8, 13, 20, 40, 100, ∞, and ‘?’.

- The PO participates but does not estimate.

- The Scrum Master participates but does not estimate unless they are doing actual development work.

- For each backlog item to be estimated, the PO reads the description of the story.

- Questions are asked and answered.

- Each estimator privately selects an estimating card representing his or her estimate.

- All cards are turned over at the same time to avoid bias and to make all estimates visible.

- High and low estimators explain their estimates.

- After a discussion, each estimator re-estimates by selecting a card.

- The estimates will likely converge. If not, the process is repeated.
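The reveal-and-discuss step of each round can be sketched as a small function that reports whether the cards converged and, if not, who the high and low estimators are. This assumes numeric cards only (no ∞ or ‘?’) and treats "converged" as every estimator showing the same card; many teams accept near-agreement instead. The estimator names are hypothetical.

```python
def review_round(estimates: dict[str, int]) -> tuple[bool, list[str], list[str]]:
    """Check one round of revealed cards.

    Returns (converged, high_estimators, low_estimators). Only team members who
    estimate are included; the PO and a non-developing Scrum Master are not.
    Here "converged" means every estimator showed the same card.
    """
    if not estimates:
        raise ValueError("no estimates to review")
    values = set(estimates.values())
    if len(values) == 1:
        return True, [], []
    high, low = max(values), min(values)
    highs = sorted(name for name, v in estimates.items() if v == high)
    lows = sorted(name for name, v in estimates.items() if v == low)
    return False, highs, lows


# First round: the high and low estimators explain, then everyone re-estimates.
print(review_round({"Ana": 3, "Ben": 8, "Chen": 5, "Dee": 3}))
# (False, ['Ben'], ['Ana', 'Dee'])
# Second round after discussion.
print(review_round({"Ana": 5, "Ben": 5, "Chen": 5, "Dee": 5}))
# (True, [], [])
```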

Some amount of preliminary design discussion is appropriate. However, spending too much time on design discussions is often wasted effort. The real value of estimating poker is to come to an agreement on the scope of a story. It’s also fun!

Velocity

The team’s velocity for an iteration is equal to the sum of the points for all the completed stories that met their Definition of Done (DoD). As the team works together over time, their average velocity (completed story points per iteration) becomes reliable and predictable. Predictable velocity assists with planning and helps limit Work in Process (WIP), as teams don’t take on more stories than their historical velocity would allow. This measure is also used to estimate how long it takes to deliver epics, features, capabilities, and enablers, which are also forecasted using story points.
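The velocity rule is simple arithmetic: only stories that met the DoD count, and the running average over past iterations is what the team plans against. A minimal sketch, with a hypothetical `IterationStory` record and made-up point values:

```python
from dataclasses import dataclass


@dataclass
class IterationStory:
    points: int
    done: bool  # True only if the story met the Definition of Done


def iteration_velocity(stories: list[IterationStory]) -> int:
    # Only stories that met the DoD count toward velocity; partial work earns nothing.
    return sum(s.points for s in stories if s.done)


def average_velocity(history: list[int]) -> float:
    # Mean completed story points per iteration over past iterations.
    return sum(history) / len(history)


iteration = [IterationStory(5, True), IterationStory(3, True),
             IterationStory(8, False), IterationStory(2, True)]
print(iteration_velocity(iteration))    # 10
print(average_velocity([38, 42, 40]))  # 40.0
```

Note that the unfinished 8-point story contributes nothing, even if most of its work was done; that is what keeps velocity an honest basis for limiting WIP.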

Capacity

Capacity is the portion of the team’s velocity that is actually available for any given iteration. Vacations, training, and other events can make team members unavailable to contribute to an iteration’s goals for some portion of the iteration. This decreases the maximum potential velocity for that team for that iteration. For example, a team that averages 40 points delivered per iteration would adjust their maximum velocity down to 36 if a team member is on vacation for one week. Knowing this in advance, the team only commits to a maximum of 36 story points during iteration planning. This also helps during PI Planning to forecast the actual available capacity for each iteration in the PI so the team doesn’t over-commit when building their PI Objectives.
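The article does not state how the 40-point team arrives at 36. One way to reproduce that figure, assuming five contributing team members, a ten-workday (two-week) iteration, and capacity scaled in proportion to available person-days, is sketched below; the function and its parameters are illustrative assumptions.

```python
def capacity(avg_velocity: float, contributors: int,
             iteration_days: int, days_unavailable: int) -> float:
    """Scale average velocity by the fraction of person-days actually available."""
    total = contributors * iteration_days
    available = total - days_unavailable
    return avg_velocity * available / total


# One of five contributors away for one week (5 workdays) of a 10-day iteration.
print(capacity(40, contributors=5, iteration_days=10, days_unavailable=5))  # 36.0
```

Proportional scaling assumes every contributor delivers roughly the same share of the points; a team that knows otherwise would adjust by hand.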

Starting Baseline for Estimation

In standard Scrum, each team’s story point estimating—and the resulting velocity—is a local and independent concern. At scale, it becomes difficult to predict the story point size for larger epics and features when team velocities can vary wildly. To overcome this, SAFe teams initially calibrate a starting story point baseline where one story point is defined roughly the same across all teams. There is no need to recalibrate team estimation or velocity. Calibration is performed one time when launching new Agile Release Trains.

Normalized story points provide a method for getting to an agreed starting baseline for stories and velocity as follows:

- Give every developer-tester on the team eight points for a two-week iteration (one point for each ideal workday, subtracting 2 days for general overhead).

- Subtract one point for every team member’s vacation day and holiday.

- Find a small story that would take about a half-day to code and a half-day to test and validate. Call it a ‘one.’

- Estimate every other story relative to that ‘one.’

Example: Assume a six-person team composed of three developers, two testers, and one PO, with no vacations or holidays. Only the five developer-testers receive points, so the estimated initial velocity = 5 × 8 points = 40 points/iteration. (Note: Adjusting a bit lower may be necessary if one of the developers or testers is also the Scrum Master.)
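The normalization steps reduce to one line of arithmetic. A minimal sketch, where `person_days_off` is a hypothetical parameter counting every vacation day and holiday for every developer-tester:

```python
# Normalized starting baseline: ten workdays in a two-week iteration minus
# two days of general overhead gives eight points per developer-tester.
POINTS_PER_DEV_TESTER = 8


def initial_velocity(dev_testers: int, person_days_off: int = 0) -> int:
    """Estimated starting velocity for one two-week iteration.

    person_days_off counts every vacation day and holiday for every
    developer-tester; a one-day holiday for five developer-testers is 5.
    The PO is not counted because only developer-testers receive points.
    """
    return dev_testers * POINTS_PER_DEV_TESTER - person_days_off


print(initial_velocity(5))                     # 40
print(initial_velocity(5, person_days_off=5))  # 35, with one team-wide holiday
```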

In this way, story points are somewhat comparable across teams. Management can better understand the cost of a story point and more accurately determine the cost of an upcoming feature or epic.

Teams will tend to increase their velocity over time, and that’s a good thing; in reality, however, the number tends to remain stable. A team’s velocity is far more affected by changing team size and technical context than by productivity variations.

Stories in the SAFe Requirements Model

As described in the SAFe Requirements Model article, the Framework applies an extensive set of artifacts and relationships to manage the definition and testing of complex systems in a Lean and Agile fashion. Figure 7 illustrates the role of stories in this larger picture.

At scale, stories are often (but not always) created from new features. Each story has acceptance tests and likely unit tests. Unit tests primarily serve to ensure that the technical implementation of the story is correct. They are also a critical starting point for test automation, as unit tests are readily automated, as described in the Test-Driven Development (TDD) article.

(Note: Figure 7 uses Unified Modeling Language (UML) notation to represent the relationships between the objects: zero to many (0..*), one to many (1..*), one to one (1), and so on.)

Learn More

[1] Leffingwell, Dean. Agile Software Requirements: Lean Requirements Practices for Teams, Programs, and the Enterprise. Addison-Wesley, 2011.

[2] Cohn, Mike. User Stories Applied: For Agile Software Development. Addison-Wesley, 2004.

Last update: 1 July 2021